I am a Founding Member of DatologyAI. Previously, I was a Staff AI Researcher at Google DeepMind, where I worked on building fundamental multimodal technology aimed at enabling new capabilities with LLMs. Before that, I spent almost 7 wonderful years at Facebook AI Research (FAIR) in New York, USA, where I worked on computer vision and machine learning.

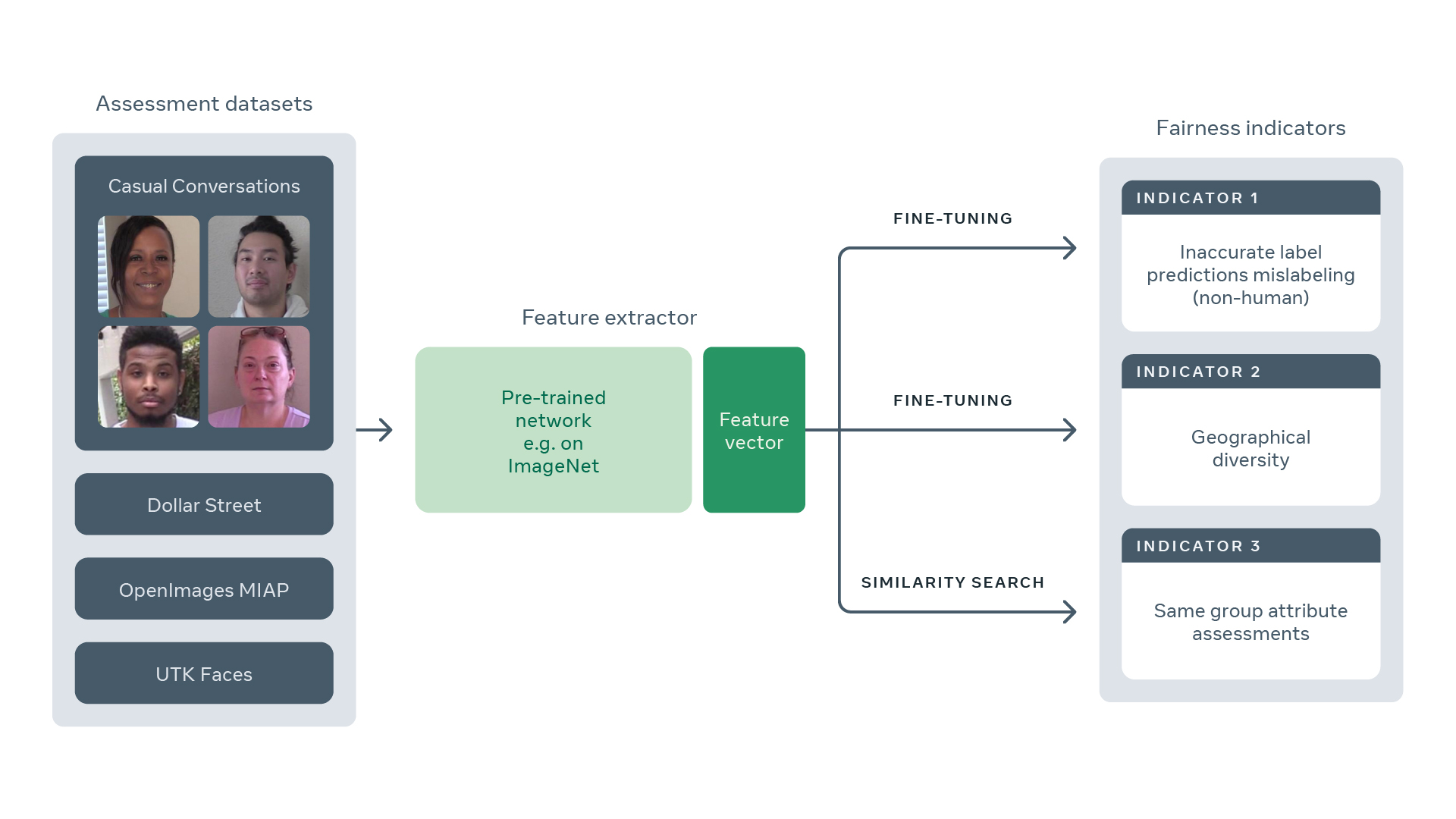

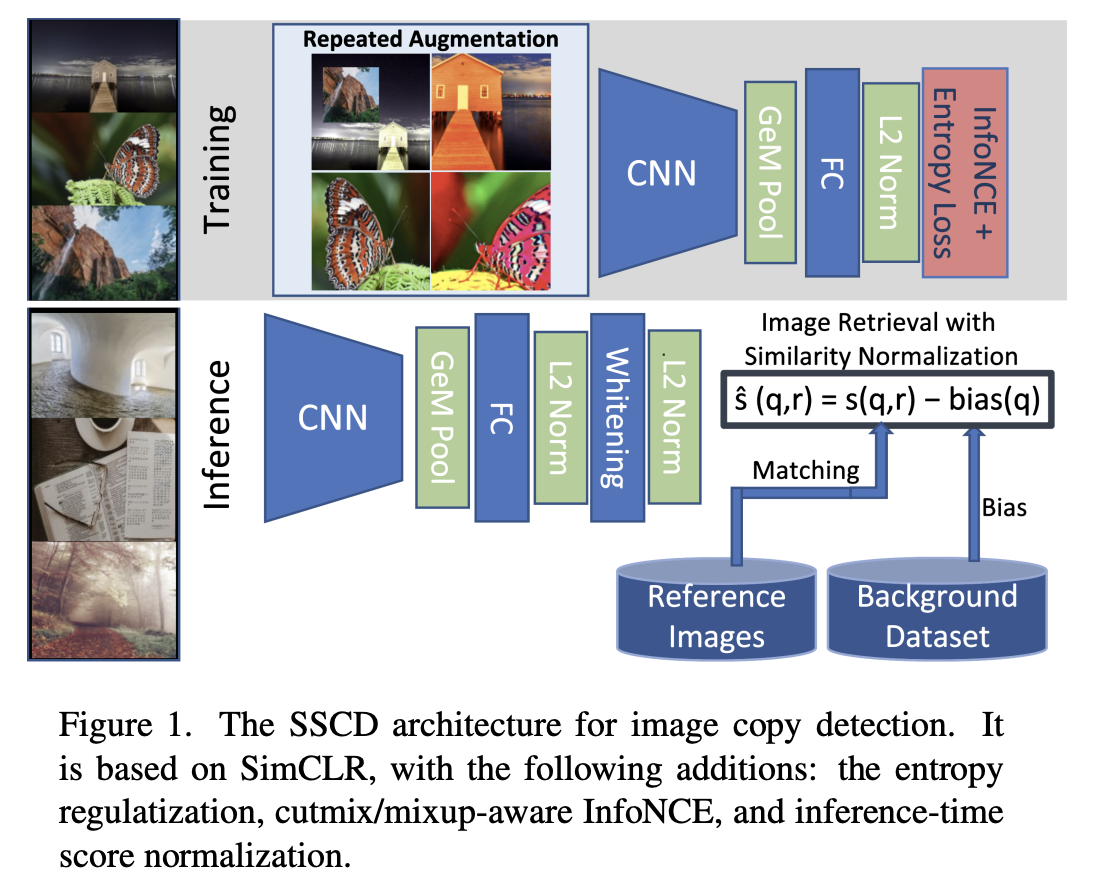

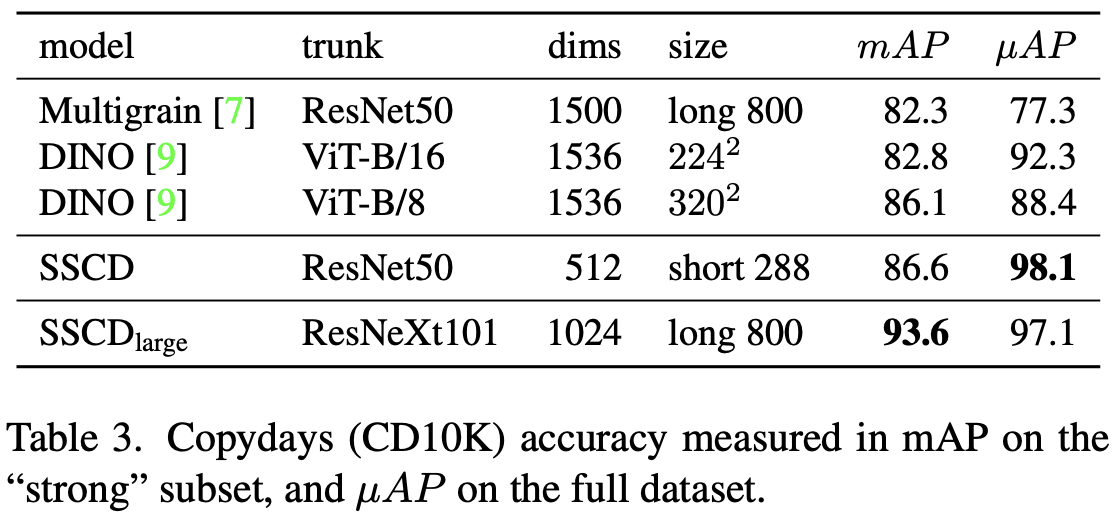

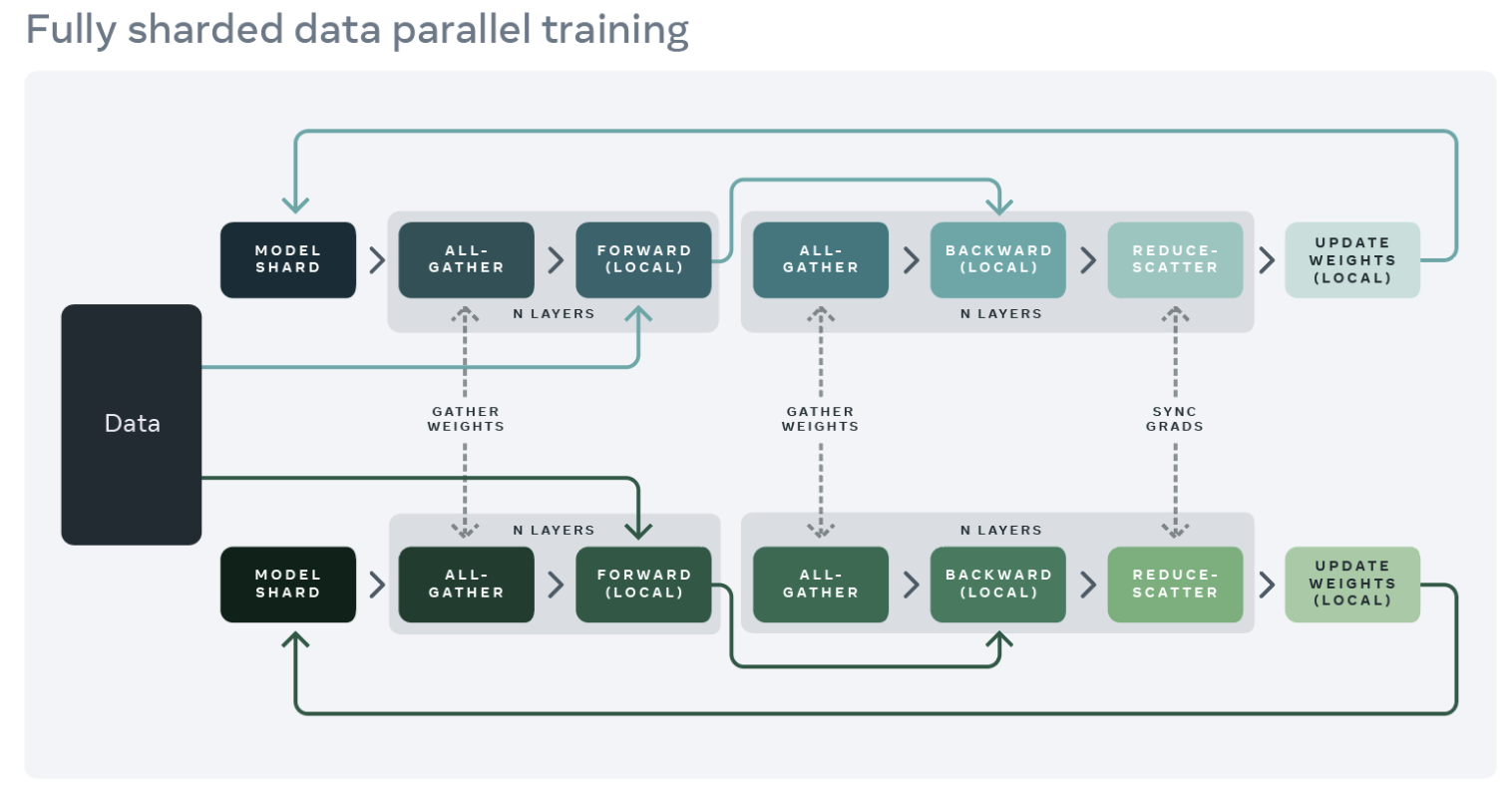

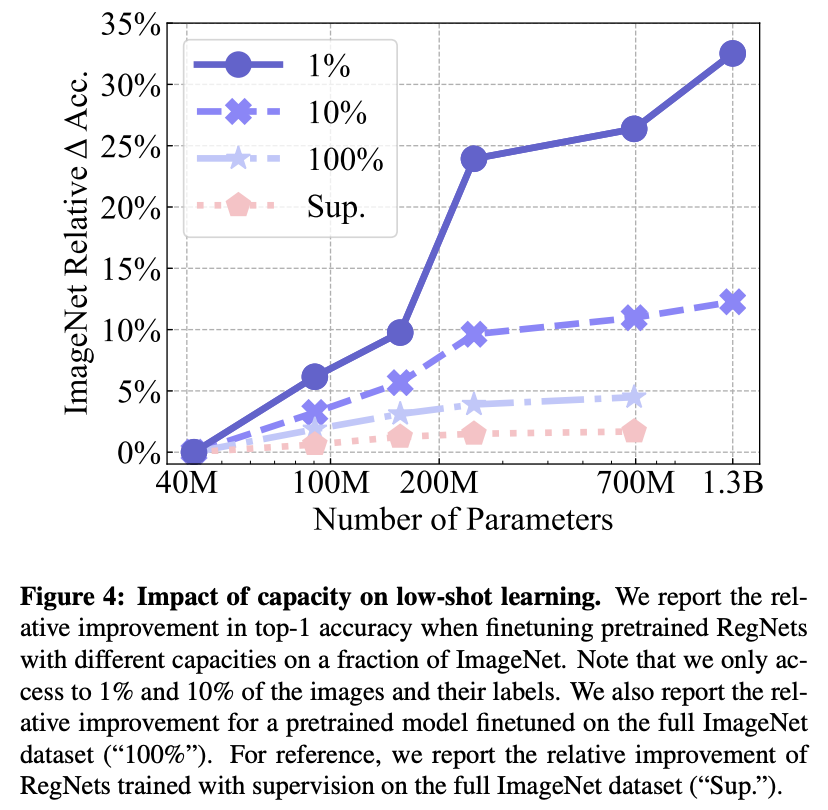

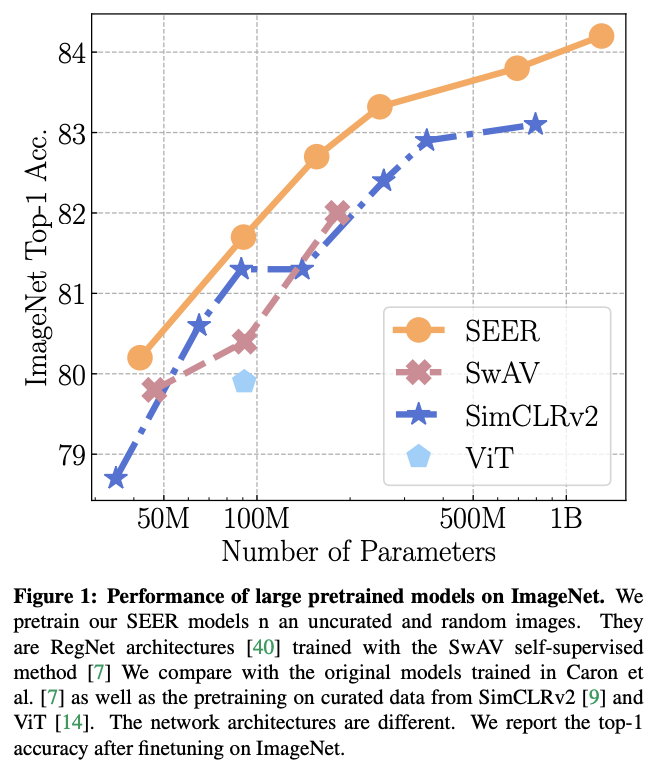

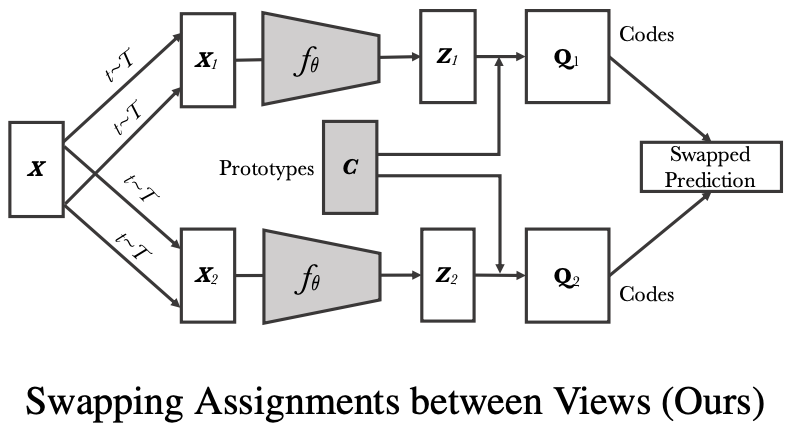

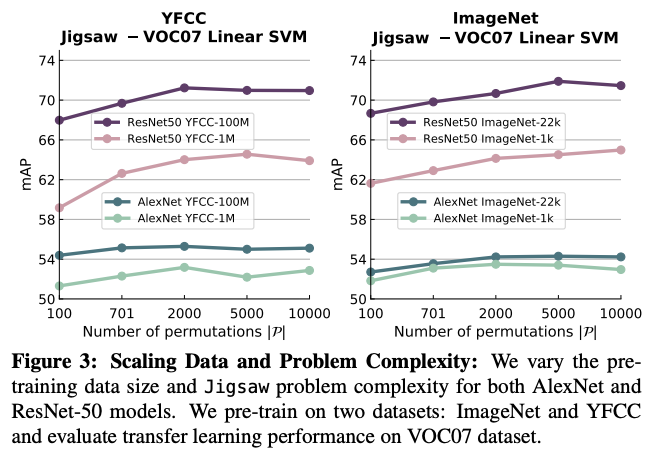

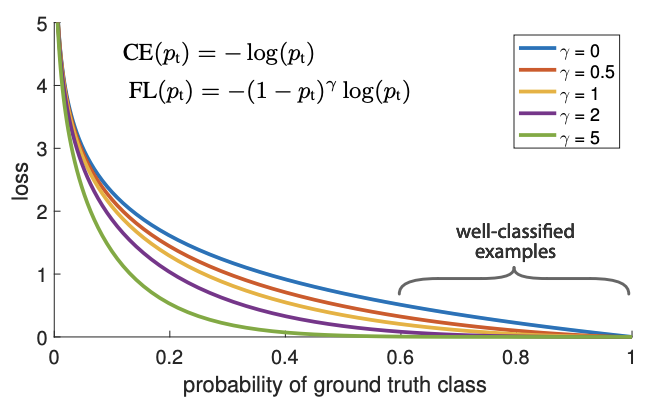

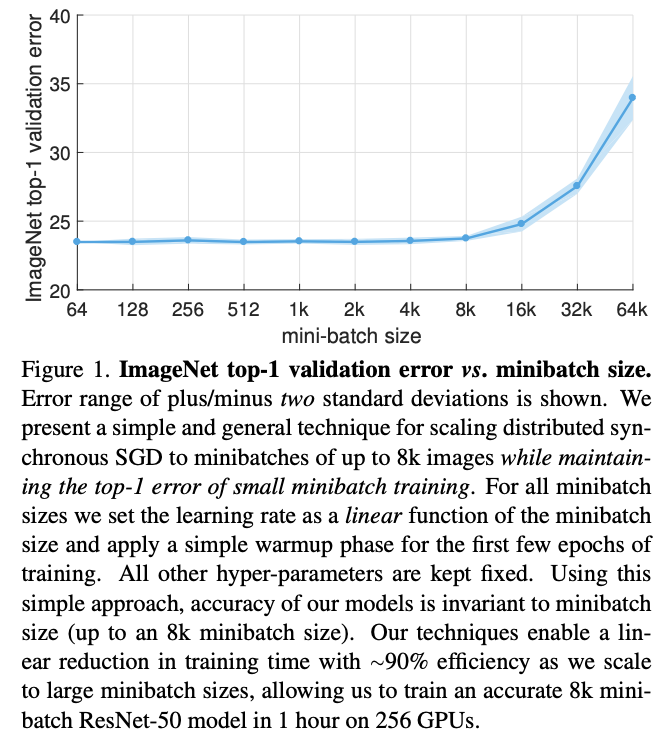

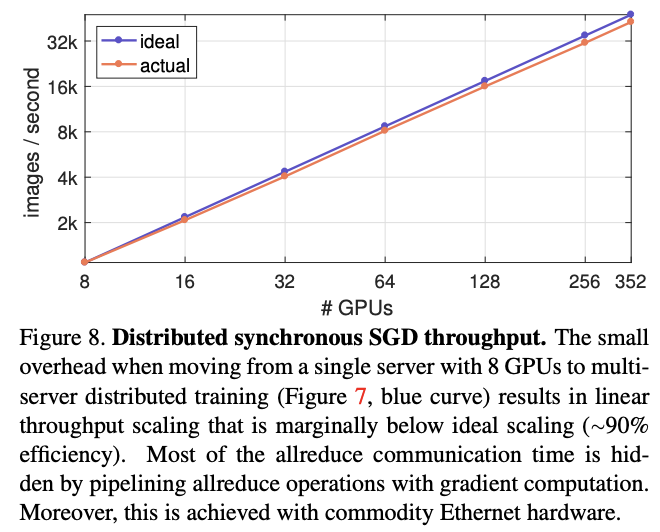

At FAIR, I led research on representation learning using self-supervision from uncurated datasets, on training large-scale computer vision models (notable projects: ImageNet in 1 Hour and SEER, a 10-billion-parameter self-supervised model), and on building socially responsible AI models. I led the development of the self-supervised learning library VISSL and am a recipient of the Best Student Paper Award for Focal Loss at ICCV 2017. I also led and organized the first-ever self-supervised learning challenge at ICCV'19.

My research interests include language modeling, evaluations for language models, data curation and filtering, multimodal learning, computer vision, retrieval augmentation, personalized AI, and socially responsible AI.